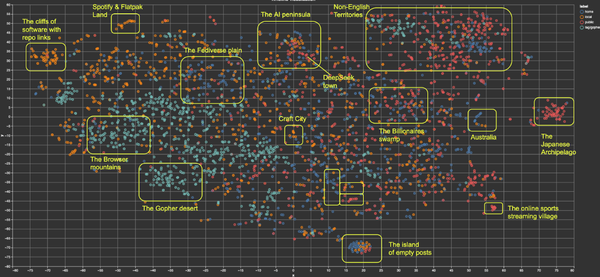

Introducing any-guardrail: A common interface to test AI safety models

Building AI agents is hard, and not only because of the LLMs themselves: tool selection, orchestration frameworks, evaluation, and safety all add their own complexity. At Mozilla.ai, we’re building tools to make agent development easier, and we noticed that guardrails for filtering unsafe outputs also need a unified interface.