Building in the Open at Mozilla.ai: 2025 Year in Review

A Year of Building Momentum

The year 2025 has been a busy one at Mozilla.ai. From hosting live demos and speaking at conferences to releasing our latest open-source tools, we made a lot of progress and explored plenty of new ground this year. We put extra emphasis on building, learning, and showing up (literally), and the in-person gatherings helped us cultivate stronger collaboration and a clearer direction.

While we were busy shipping updates and releases for our Choice-first Stack (our open-source tools), we also decided to build something new: an Agent Platform geared towards non-technical teams and professionals, built on open foundations (i.e., our modular agentic stack).

Growing Alongside the Work

This year involved a lot more building, so the scope of the work grew, and so did the team. We added new team members across several departments, including engineering, developer relations, and product/go-to-market.

More hands on board and broader experience have allowed us to ship more confidently and support a wider range of initiatives throughout the year.

Workweeks and More IRL Events

Remote work has its perks, but there’s nothing like a good ol’ fashioned in-person event. Meeting new and longtime colleagues in a physical environment reinforced the value of spending time together in person. Our first workweek took place in sunny Málaga, Spain in March, followed by our second workweek in charming Lisbon, Portugal in October.

These moments created space for better alignment and stronger relationships across the team. We connected through team-building activities and casual hangouts, coded together, and shared ideas and even stories. By the end of the year, we had grown into a more connected team, ready to support the pace of building ahead.

Staying Close to the Developer Community

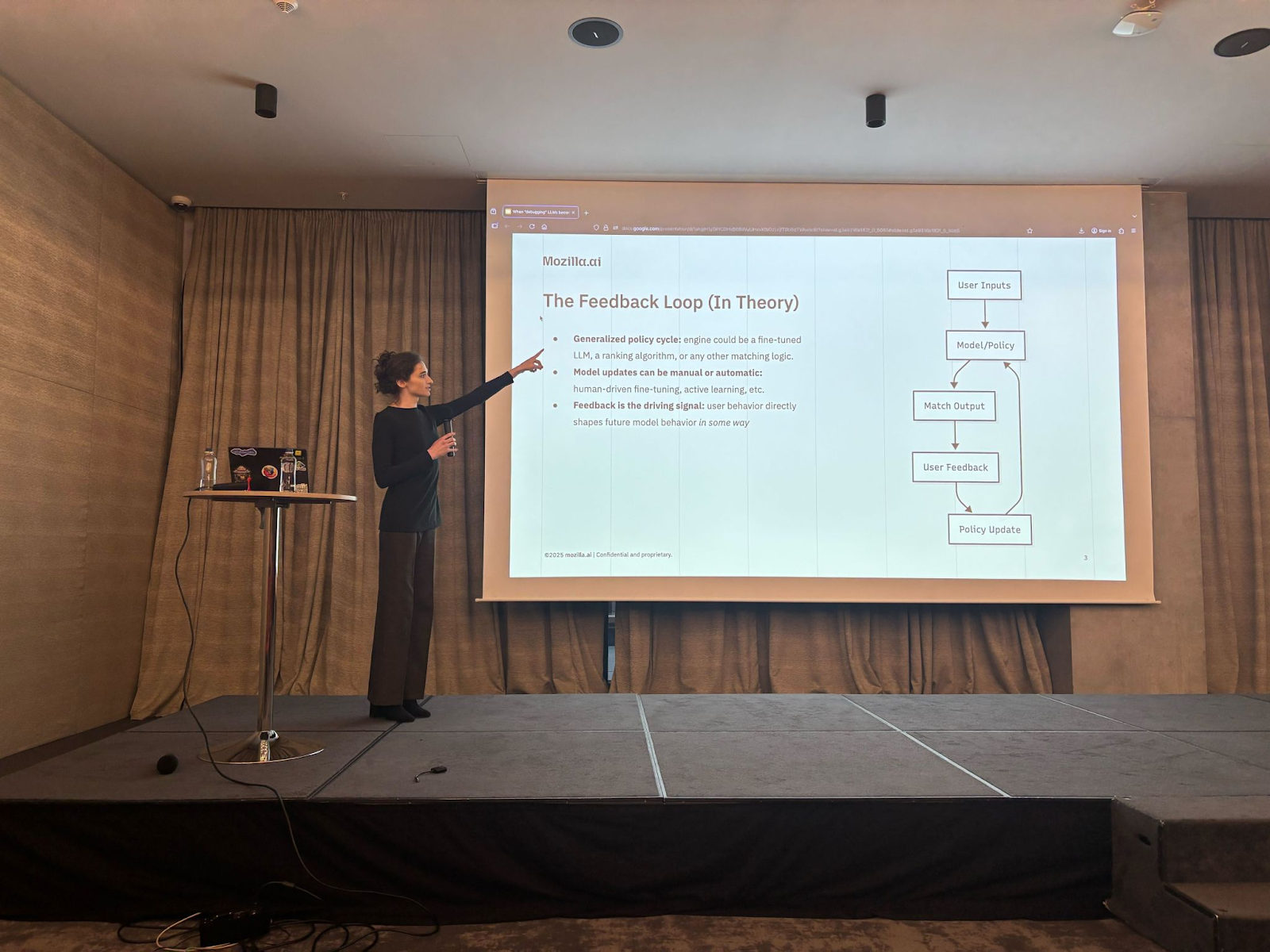

In addition to our in-person events, we’ve also stayed closely connected to the developer and open-source community. Many of our team members have attended and participated in various conferences, podcasts, and community events.

Here are a few places where those conversations happened:

- FOSDEM 2025

- Stockholm AI

- Universitat Politècnica de València

- Vertical AI Agents

- OxML

- FediForum (watch the demo by Davide, ML Engineer @ Mozilla.ai)

- Open Data Science Conference

- Agentic AI Summit (watch the session by John, our CEO)

- AI_dev Open Source

- DataFest Tbilisi

- Credo AI Trust Summit

- Pittsburgh TechFest

- SFSCON (watch the presentation by Davide)

We also hosted a couple of live demos on the Mozilla Discord server and interactive sessions around the Agent Platform, and made appearances on these podcasts:

- Outside the Fox (Nathan Brake, ML Engineer @ Mozilla.ai)

- Stack Overflow (John Dickerson, CEO @ Mozilla.ai)

- Why Thinking Small Is the Future of AI (Davide Eynard, ML Engineer @ Mozilla.ai)

Through these events and conversations, we gained valuable insights that helped shape how our tools evolved throughout the year. Builders, researchers, and domain experts influenced what we prioritized and what we simplified.

We also spent time outside traditional developer circles. Events like TAUS (translation and localization) exposed us to industries undergoing their second major AI disruption, where the questions are no longer “can this work?” but “how does this fit into production workflows, trust models, and real business constraints?”

That perspective carried into Mozilla Festival (MozFest), three days filled with creative energy, collaboration, and thoughtful conversations about shaping a better internet and the future of open-source AI. It also showed up at NeurIPS 2025, where open-source AI clearly crossed a new threshold. Small models are no longer seen as a compromise, agentic systems are maturing (even if unevenly), and interest from enterprises, governments, and investors is becoming harder to ignore. We’re working on a deeper reflection with John, our CEO, on how all of these topics will shape Mozilla.ai’s direction, so keep an eye on the blog.

These conversations reinforced our focus on practical agentic systems, small models, and tools that adapt to existing processes rather than replacing them wholesale.

Speaking of builders, we also launched a new program called Builders in Residence (BiR). Its main goal is to work closely with practical, application-driven researchers who care deeply about open-source AI. A three-month fellowship (with an option to extend based on project scope) that is fully remote and open to participants worldwide, the BiR program supports open research, hands-on collaboration, and work that feeds directly back into the open-source AI ecosystem.

All Aboard the Mozilla AI Ship

The Blueprints Hub continued to grow, with around 10 blueprints published by the team and the community. It gained traction as a place for hands-on, real-world workflows that help developers move from ideas to working prototypes, and it played an important role in shaping how we approached our newer tools and workflows.

A few examples from the Blueprints Hub include:

- Create your own tailored podcast using your documents

- Build your own local social media timeline algorithm

- Run AI agents directly in the browser using WebAssembly (WASM)

Lumigator also became available for broader use, giving teams a tool they could rely on to evaluate and compare language models with less guesswork. Built with transparency and usability in mind, Lumigator made model selection feel more approachable, and the lessons from its use continue to show up in how evaluation and benchmarking are handled across the stack.

This brings us to the Choice-first Stack. Five open-source libraries shipped this year, laying the groundwork for a more flexible way to build, test, and run AI systems.

Here’s what that work turned into:

- any-agent (compare and run any agent framework from one interface)

- any-llm (a library that provides a single interface to various LLMs)

- any-llm-gateway (track usage, set limits, and deploy across any LLM provider)

- any-llm-platform (a secure cloud vault and usage-tracking service for all your LLM providers)

- any-guardrail (a common interface for guardrail models)

- mcpd (a tool to declaratively manage Model Context Protocol (MCP) servers)

- mcpd-proxy (centralized tool access for AI agents)

- encoderfile (an open-source deployment format that compiles tokenizers and model weights into self-contained, single-binary executables)

In a nutshell, these tools form the Choice-first Stack, which helps developers work across different LLMs, manage access and cost, and test safety assumptions without tying themselves to a single provider or approach.

We also adopted the llamafile project, continuing work toward local, privacy-first AI that runs closer to users and their data.

Building on the open-source tools, we also took a step toward supporting non-technical teams. In October 2025, our team announced the alpha of the Agent Platform, where teams describe their goals and adaptive AI agents work with existing tools and processes, learning context and improving over time. The beta waitlist opens in early January 2026, so stay tuned for more details.

The any-llm platform also entered alpha this year, with early testers exploring secure key management and usage tracking across providers. Both platforms are being shaped in close collaboration with early testers, turning real feedback into real improvements as they move toward broader release.

What’s Next in 2026

The year brought steady progress across products, community, and the team. And did we mention that we also revamped the website? Special thanks to Weedoo Creative for helping bring it to life and giving our work a clearer, more welcoming home.

We have a lot more up our sleeves for 2026: more to explore, more to refine, and plenty to shape in the open. The building continues, and so do the conversations.

Last but not least, we’d like to thank YOU for using our tools, joining our conversations, sharing feedback, and building alongside us this year. We’re excited, to say the least, and look forward to what’s ahead!