Introducing any-llm managed platform: A secure cloud vault and usage-tracking service for all your LLM providers

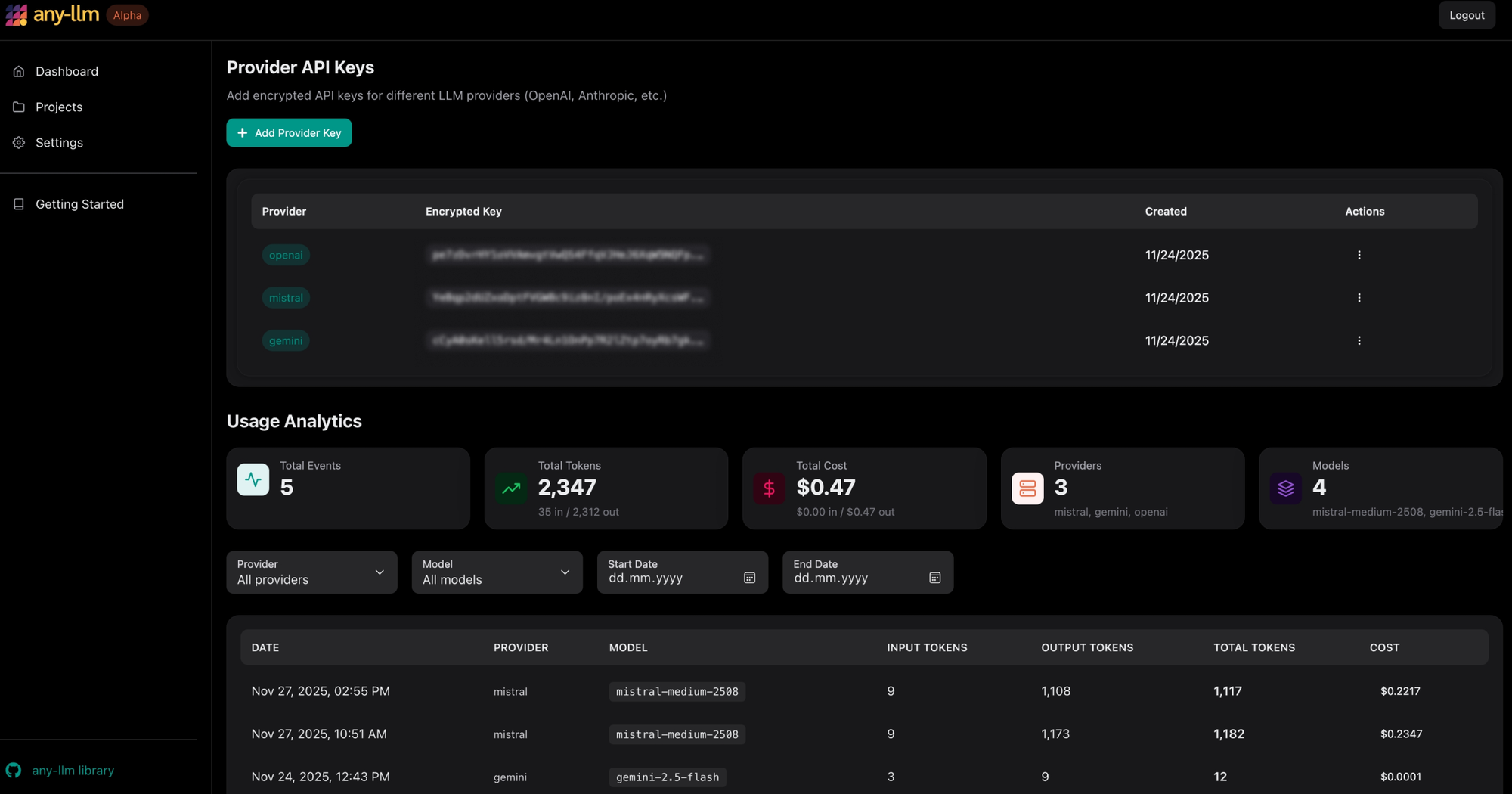

any-llm managed platform adds end-to-end encrypted API key storage and usage tracking to the any-llm ecosystem. Keys are encrypted client-side and are never visible to us, and you can monitor token usage, costs, and budgets in one place. It supports OpenAI, Anthropic, Google, and more.

We recently released any-llm v1.0, our SDK for building with multiple LLM providers through a single unified interface, along with any-llm-gateway. Today we're expanding the ecosystem with an end-to-end encrypted API key vault and usage-tracking service that works across all your LLM providers. It encrypts your API keys client-side and tracks usage without ever seeing your prompts or responses.

Because it integrates natively with any-llm, any-llm managed platform gives you centralized key management, unified cost tracking, and secure usage analytics across both cloud and local providers. API keys are encrypted before they leave your device, and we can never decrypt them.

Key Features

End-to-End Encryption: Your API keys are encrypted with the public key of a key pair generated client-side in your browser. We store only encrypted data.

Cost Tracking: See real-time spending across OpenAI, Anthropic, Google, and other providers in a unified dashboard. Identify expensive queries and compare costs across models.

API Key Budgeting: Set a spending budget for each API key to limit its use; once the limit is reached, further requests are automatically blocked.

Privacy-First Usage Analytics: Track token usage, model performance, and costs without exposing your prompts or responses.

Multi-Provider Support: any-llm managed platform works with all major LLM providers supported by any-llm, including OpenAI, Anthropic, Google, and more. Store all your provider keys in one encrypted vault and organize them by project.

Project-Based Organization: Create multiple projects to organize your API keys by team, application, or environment. Each project can have its own set of provider keys with isolated usage tracking.

SDK & CLI Integrations: Access your provider keys programmatically from any-llm and any-llm-gateway using a single virtual key, without storing credentials in your code. A cryptographic challenge-response system provides secure, one-time-use authentication for these SDK and CLI integrations.
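The cost-tracking, per-key budgeting, and per-project features listed above can be illustrated with a small bookkeeping model. This is a hypothetical sketch, not the platform's API: the class names (`KeyUsage`, `Project`), the model names, and the per-token prices are all invented for illustration. Costs are computed only from token counts, which matches the post's point that usage can be tracked without seeing prompts.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import Dict, Tuple

# Hypothetical prices in USD per 1M tokens as (input, output); real prices differ.
PRICES_PER_MTOK: Dict[str, Tuple[Decimal, Decimal]] = {
    "gpt-example": (Decimal("2.50"), Decimal("10.00")),
    "claude-example": (Decimal("3.00"), Decimal("15.00")),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Cost of one request, derived from token counts alone."""
    in_price, out_price = PRICES_PER_MTOK[model]
    return (in_price * input_tokens + out_price * output_tokens) / Decimal(1_000_000)


@dataclass
class KeyUsage:
    """Spend and budget for one provider key (hypothetical model)."""
    budget_usd: Decimal
    spent_usd: Decimal = Decimal("0")
    by_model: Dict[str, Decimal] = field(default_factory=dict)  # enables per-model comparison

    def allow_request(self) -> bool:
        # Budget cap: once spend reaches the budget, new requests are refused.
        return self.spent_usd < self.budget_usd

    def record(self, model: str, input_tokens: int, output_tokens: int) -> Decimal:
        cost = request_cost(model, input_tokens, output_tokens)
        self.spent_usd += cost
        self.by_model[model] = self.by_model.get(model, Decimal("0")) + cost
        return cost


@dataclass
class Project:
    """Each project owns its own keys, so its usage is tracked in isolation."""
    name: str
    keys: Dict[str, KeyUsage] = field(default_factory=dict)  # provider name -> usage

    def total_spend(self) -> Decimal:
        return sum((k.spent_usd for k in self.keys.values()), Decimal("0"))


prod = Project("prod", {"openai": KeyUsage(budget_usd=Decimal("0.05"))})
key = prod.keys["openai"]
for _ in range(3):
    if not key.allow_request():
        print("budget reached; request blocked")
        break
    key.record("gpt-example", input_tokens=10_000, output_tokens=2_000)
print(prod.total_spend())
```

Because a request's cost is only known after it completes, a pre-request check like `allow_request` can let the last request push spend slightly past the budget; a stricter design could reserve an estimated cost before each call.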

End-to-End Encryption

What makes any-llm managed platform unique is its end-to-end encrypted architecture.

When you first set up your account, a key pair is generated entirely in your browser. Your private key never touches our servers. Instead, you download it as an ANY_LLM_KEY file that stays on your device. Your public key is uploaded to our servers.

When you add provider API keys (OpenAI, Anthropic, Google, etc.), they are encrypted in your browser with your public key before storage. We never receive or store plaintext keys at any point.

When the any-llm client needs a provider key, it goes through a cryptographic challenge-response exchange. The server sends an encrypted challenge that only your private key can decrypt. Once the client proves it holds that key, the server releases your encrypted provider key. The client decrypts the provider key locally, uses it to call the LLM provider, and then reports back token usage metadata, never your actual prompts or responses.

This architecture means that even we, as the service operators, cannot decrypt your API keys: our servers only ever hold your public key and encrypted data.
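The server's side of the challenge-response step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the platform's implementation: `VaultServer`, `issue_challenge`, `redeem`, the `seal_to_user` hook, and the 60-second expiry are all hypothetical. To keep the example runnable with only the Python standard library, the real public-key encryption of the challenge is replaced by an insecure placeholder that merely reverses bytes.

```python
import hmac
import secrets
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple


@dataclass
class PendingChallenge:
    nonce: bytes       # the plaintext answer the server expects back
    expires_at: float  # absolute deadline, seconds since the epoch


@dataclass
class VaultServer:
    """Hypothetical server state: only ciphertext and outstanding challenges."""
    # Stand-in for "encrypt to the user's public key" (an assumption of this sketch).
    seal_to_user: Callable[[bytes], bytes]
    encrypted_keys: Dict[str, bytes] = field(default_factory=dict)  # provider -> ciphertext
    ttl_seconds: float = 60.0
    _pending: Dict[str, PendingChallenge] = field(default_factory=dict, init=False)

    def issue_challenge(self) -> Tuple[str, bytes]:
        """Create a random nonce and send it sealed, so only the key holder can read it."""
        challenge_id = secrets.token_hex(16)
        nonce = secrets.token_bytes(32)
        self._pending[challenge_id] = PendingChallenge(nonce, time.time() + self.ttl_seconds)
        return challenge_id, self.seal_to_user(nonce)

    def redeem(self, challenge_id: str, answer: bytes, provider: str) -> Optional[bytes]:
        """Return the still-encrypted provider key if the answer is correct, else None."""
        # pop() removes the challenge on first use, so it can never be replayed,
        # whether or not this attempt succeeds.
        pending = self._pending.pop(challenge_id, None)
        if pending is None or time.time() > pending.expires_at:
            return None
        # Constant-time comparison avoids leaking how many bytes matched.
        if not hmac.compare_digest(pending.nonce, answer):
            return None
        return self.encrypted_keys.get(provider)


def toy_seal(data: bytes) -> bytes:
    """INSECURE placeholder for public-key encryption: just reverses the bytes."""
    return data[::-1]


def toy_open(data: bytes) -> bytes:
    """INSECURE placeholder for private-key decryption: undoes toy_seal."""
    return data[::-1]


server = VaultServer(seal_to_user=toy_seal, encrypted_keys={"openai": b"<ciphertext>"})
cid, sealed = server.issue_challenge()
answer = toy_open(sealed)                     # the client "decrypts" the challenge
print(server.redeem(cid, answer, "openai"))   # b'<ciphertext>'
print(server.redeem(cid, answer, "openai"))   # None: the challenge was already used
```

Note that `redeem` returns the provider key still encrypted; in the flow described above, only the client can decrypt it. Consuming the challenge even on a wrong answer is a design choice of this sketch that keeps every challenge strictly one-time-use.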

Integration with the any-llm Ecosystem

any-llm managed platform is a core part of the choice-first stack and integrates directly with any-llm and any-llm-gateway. When you configure any-llm-gateway or the any-llm SDK with your ANY_LLM_KEY file, they can automatically retrieve the appropriate provider keys from the managed platform for each request.

Security and Compliance

Security is at the core of any-llm managed platform's design. Your API keys are encrypted on your device with authenticated encryption (XChaCha20-Poly1305) before they leave it, so they are cryptographically inaccessible to the service and to its operator, Mozilla.ai.

For organizations with strict data governance requirements, the privacy-first logging model means you can track usage and costs without storing sensitive content. This makes it easier to satisfy data privacy regulations while maintaining the observability you need for production systems.
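The privacy-first model can be illustrated with a usage record that simply has no field for prompts or responses. In this hypothetical sketch, `UsageEvent`, `usage_event_from_response`, and `to_payload` are illustrative names, and the input is assumed to be a dict shaped like an OpenAI-style chat completion response, whose `usage` object carries `prompt_tokens` and `completion_tokens`; the platform's actual reporting format is not described in this post.

```python
import json
import time
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class UsageEvent:
    """Metadata-only usage record: there is no prompt or response field at all."""
    project: str
    provider: str
    model: str
    input_tokens: int
    output_tokens: int
    timestamp: float


def usage_event_from_response(project: str, provider: str, response: dict) -> UsageEvent:
    # Only the model name and token counts are read; message content is never touched.
    usage = response["usage"]
    return UsageEvent(
        project=project,
        provider=provider,
        model=response["model"],
        input_tokens=usage["prompt_tokens"],
        output_tokens=usage["completion_tokens"],
        timestamp=time.time(),
    )


def to_payload(event: UsageEvent) -> str:
    """Serialize the event for reporting; only the dataclass fields are included."""
    return json.dumps(asdict(event))


response = {
    "model": "gpt-example",
    "choices": [{"message": {"role": "assistant", "content": "a private answer"}}],
    "usage": {"prompt_tokens": 12, "completion_tokens": 34, "total_tokens": 46},
}
payload = to_payload(usage_event_from_response("prod", "openai", response))
print(payload)  # model name and token counts only, no message content
```

Defining the record's schema without any content fields makes the guarantee structural: nothing downstream of `to_payload` can log a prompt by accident, because the payload never contains one.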

The service is currently in alpha.

If you’d like to try it ahead of general availability (GA), you can join the waitlist via our Google Form.